
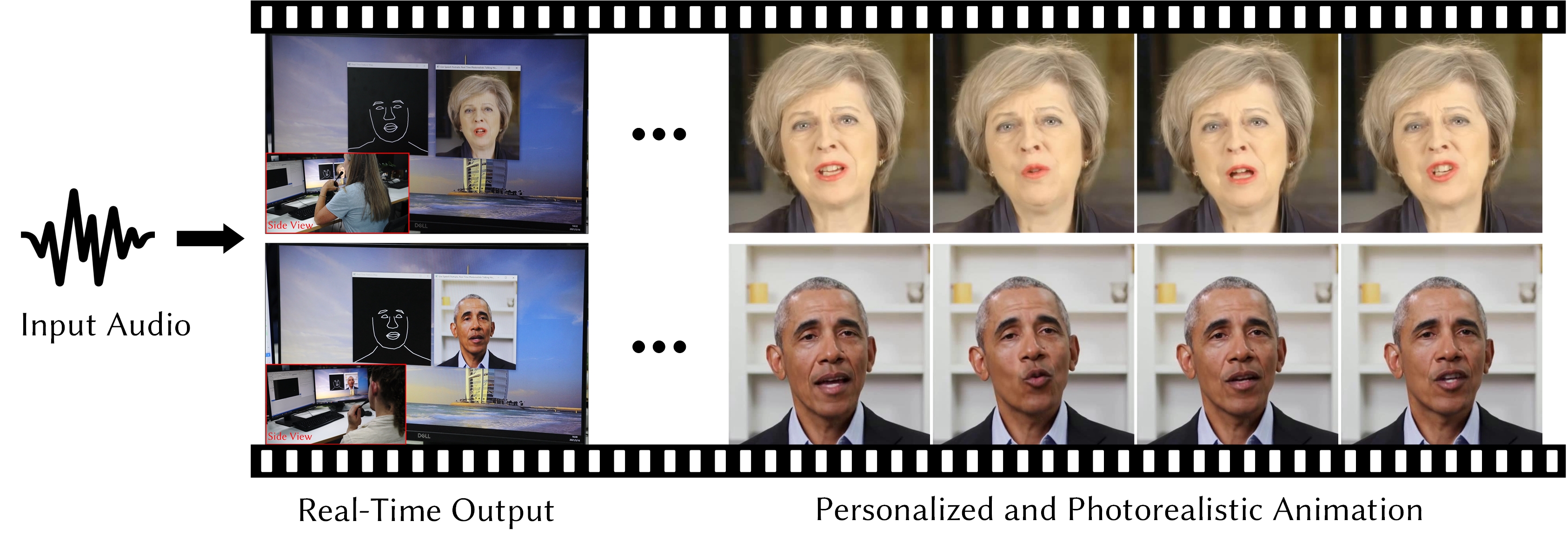

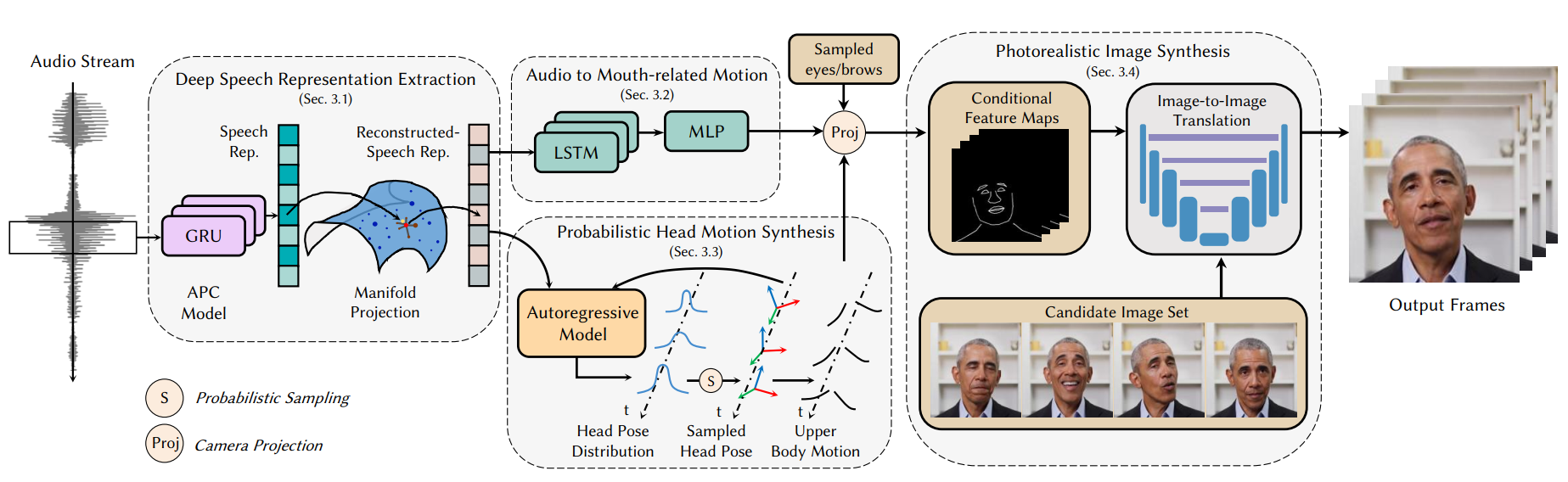

Abstract

To the best of our knowledge, we present the first live system that generates personalized photorealistic talking-head animation driven only by audio signals at over 30 fps. Our system contains three stages. The first stage is a deep neural network that extracts deep audio features, followed by a manifold projection that maps the features to the target person's speech space. In the second stage, we learn facial dynamics and motions from the projected audio features. The predicted motions include head poses and upper body motions; the former are generated by an autoregressive probabilistic model that models the head pose distribution of the target person, and the latter are deduced from the head poses. In the final stage, we generate conditional feature maps from the previous predictions and send them, together with a candidate image set, to an image-to-image translation network to synthesize photorealistic renderings. Our method generalizes well to in-the-wild audio and successfully synthesizes high-fidelity personalized facial details, e.g., wrinkles and teeth. Our method also allows explicit control of head poses. Extensive qualitative and quantitative evaluations, along with user studies, demonstrate the superiority of our method over state-of-the-art techniques.
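To make the second stage more concrete, the following is a minimal sketch of autoregressive probabilistic sampling for head poses. It is not the system's implementation: the function name `sample_head_poses`, the 6-dimensional pose (assumed here to be 3 rotation and 3 translation values), the history length, and the fixed random linear maps standing in for a trained network are all assumptions made for illustration. The sketch only shows the general idea: each new pose is sampled from a Gaussian whose mean depends on the previous poses and the current audio feature.

```python
import numpy as np


def sample_head_poses(audio_feats, history_len=4, pose_dim=6, seed=0):
    """Toy autoregressive Gaussian sampler for head poses (illustrative only)."""
    rng = np.random.default_rng(seed)
    num_frames, feat_dim = audio_feats.shape

    # Hypothetical stand-in for a trained predictor: fixed random linear maps.
    w_hist = rng.normal(scale=0.1, size=(history_len * pose_dim, pose_dim))
    w_audio = rng.normal(scale=0.1, size=(feat_dim, pose_dim))
    log_sigma = np.full(pose_dim, -3.0)  # assumed small, constant predicted std

    # The first `history_len` rows are zero padding that serves as initial history.
    poses = np.zeros((history_len + num_frames, pose_dim))
    for t in range(num_frames):
        # Condition on the previous `history_len` poses and the current audio feature.
        history = poses[t:t + history_len].reshape(-1)
        mean = history @ w_hist + audio_feats[t] @ w_audio
        # Sample the next pose from the predicted Gaussian and feed it back in.
        noise = rng.standard_normal(pose_dim)
        poses[history_len + t] = mean + np.exp(log_sigma) * noise
    return poses[history_len:]


if __name__ == "__main__":
    feats = np.zeros((10, 8))  # 10 frames of hypothetical 8-dimensional audio features
    print(sample_head_poses(feats).shape)
```

Because each pose is sampled rather than regressed deterministically, the same audio can yield different plausible head motions when the seed changes, which is the practical appeal of a probabilistic formulation.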


Supplementary Video


Citation


Acknowledgments

We would like to thank Shuaizhen Jing for help with the TensorRT implementation. We are grateful to Qingqing Tian for the facial capture. Yuanxun Lu would also like to thank Xinya Ji for her mental support and proofreading during the project. This work was supported by NSFC grants 62025108 and 61627804 and by the Leading Technology of Jiangsu Basic Research Plan (BK20192003).